Taming The Cephodian Octopus – Reef

My Ceph cluster is running now! And it is amazingly powerful :-) Updates for Ceph Reef: Quincy is no longer the latest release, so I reinstalled my cluster with Reef (now…

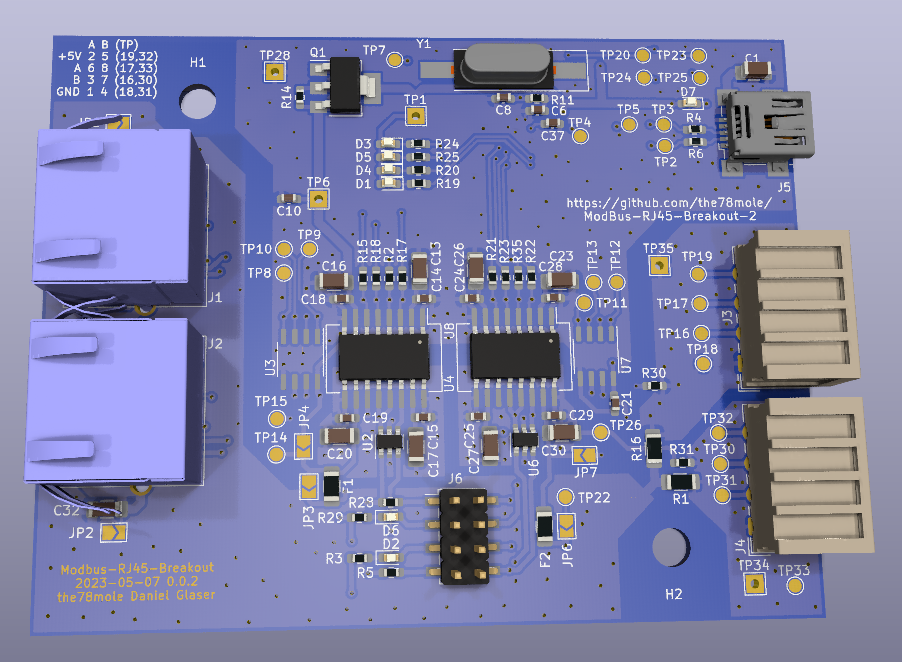

Again, I quickly developed a gadget to ease my life as a data-collecting mole. ModBus adapters mostly have three drawbacks. Firstly, they have open wires; secondly, if you route…

If you bought one of my modules recently, it is also worth looking at the how-to project page for this module :-) There you can find a video on how to assemble the board…

It's been quite some time since I wrote my last blog post. But I had a lot to do, and there has been quite some action, which created material for new posts.…

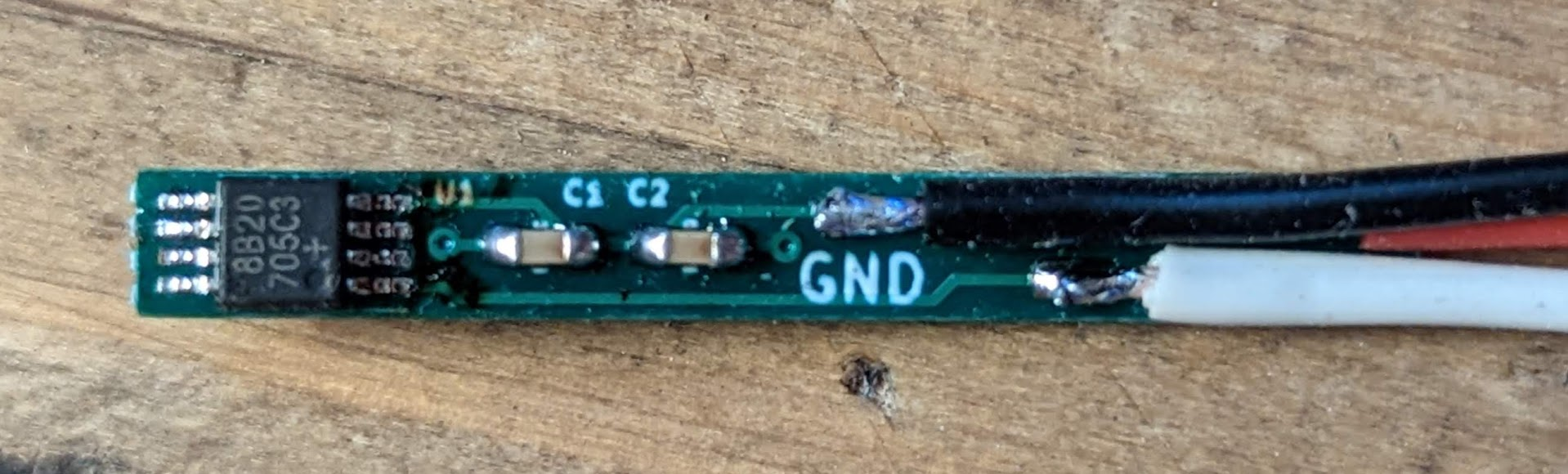

As always, I first want to quickly explain what brought me to this project... If you look around in different stores and on AliExpress, you can find a ton of…

The Problem I Encountered... Just after installing the Pulse IR and the Tibber Bridge, I was quite frustrated with the continuity of the Tibber data. This was not…

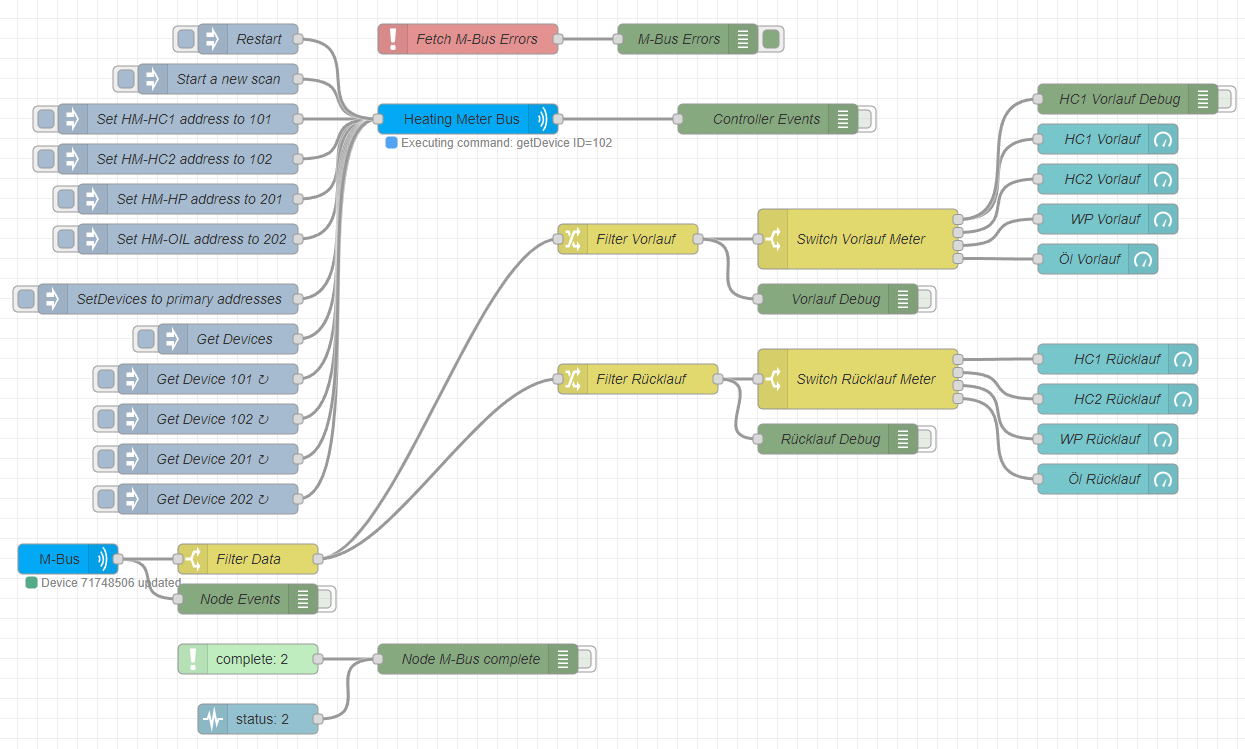

OK, guys, as you may have noticed when reading my post about my new heating system, I have three heat meters installed with M-Bus connectivity. I also wrote some…

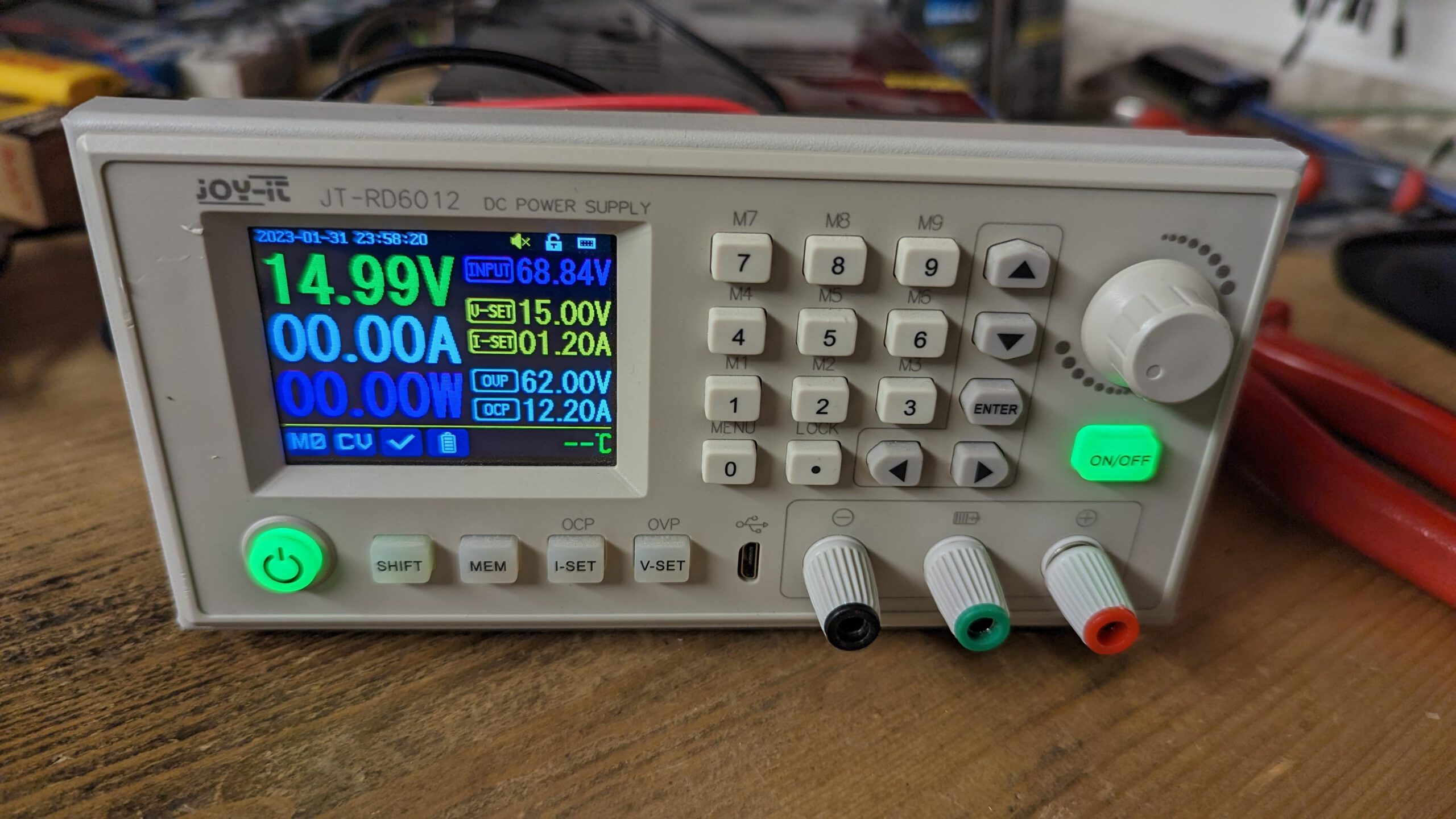

OK, now you'll definitely believe that moles are crazy. You might ask: why would you need a laboratory power supply that is controlled by your smart home (Home Assistant)? It's easy,…

Introduction: Previously in this series, a payload was obtained from a meter over the EN 13757 protocol. Chapter 6.3, in a nutshell, describes a variable-sized array of data blocks. Each block…
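To make the "variable-sized array of data blocks" idea more concrete, here is a minimal Python sketch of how such a payload could be split into records. It assumes the usual EN 13757-3 record layout: a DIF byte whose low nibble encodes the data length, optional DIFE bytes chained via bit 7, a VIF byte, optional VIFE bytes chained the same way, then the data itself. The names `split_records`, `DataRecord` and `DATA_LENGTHS` are hypothetical, chosen for illustration only. The sketch deliberately skips variable-length (LVAR) data, special-function codings other than the 0x2F filler and 0x0F/0x1F manufacturer data, and plain-text VIFs, so treat it as a starting point rather than a complete decoder.

```python
from dataclasses import dataclass

# Data length in bytes per DIF data-field nibble (low 4 bits).
# None marks codings this sketch does not decode (variable length, special functions).
DATA_LENGTHS = {
    0x0: 0, 0x1: 1, 0x2: 2, 0x3: 3, 0x4: 4, 0x5: 4, 0x6: 6, 0x7: 8,
    0x8: 0, 0x9: 1, 0xA: 2, 0xB: 3, 0xC: 4, 0xD: None, 0xE: 6, 0xF: None,
}


@dataclass
class DataRecord:
    dif: int
    difes: list[int]
    vif: int
    vifes: list[int]
    data: bytes


def _read_extensions(payload: bytes, pos: int) -> tuple[list[int], int]:
    """Collect extension bytes while bit 7 of the previous byte is set."""
    exts = []
    while payload[pos - 1] & 0x80:
        exts.append(payload[pos])
        pos += 1
    return exts, pos


def split_records(payload: bytes) -> list[DataRecord]:
    """Split an application-layer payload into DIF/VIF/data records (sketch)."""
    records = []
    pos = 0
    while pos < len(payload):
        dif = payload[pos]
        if dif == 0x2F:            # idle filler byte: skip it
            pos += 1
            continue
        if dif in (0x0F, 0x1F):    # manufacturer-specific data runs to the end
            break
        pos += 1
        difes, pos = _read_extensions(payload, pos)
        length = DATA_LENGTHS[dif & 0x0F]
        if length is None:
            raise NotImplementedError(f"data field coding 0x{dif & 0x0F:X} not handled")
        vif = payload[pos]
        pos += 1
        vifes, pos = _read_extensions(payload, pos)
        data = payload[pos:pos + length]
        if len(data) != length:
            raise ValueError("payload truncated inside a data record")
        pos += length
        records.append(DataRecord(dif, difes, vif, vifes, data))
    return records
```

For example, a payload consisting of a 32-bit integer record (DIF 0x04, VIF 0x13), a filler byte, and a 16-bit record with one DIFE and one VIFE splits into two `DataRecord` objects; integer values can then be read with `int.from_bytes(record.data, "little")`, since M-Bus data is transmitted least significant byte first.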

Good day, dudes and dudettes! I'm into software system architecture at least as much as I'm into coding. Otherwise, I could go on forever and a day about myself, but…