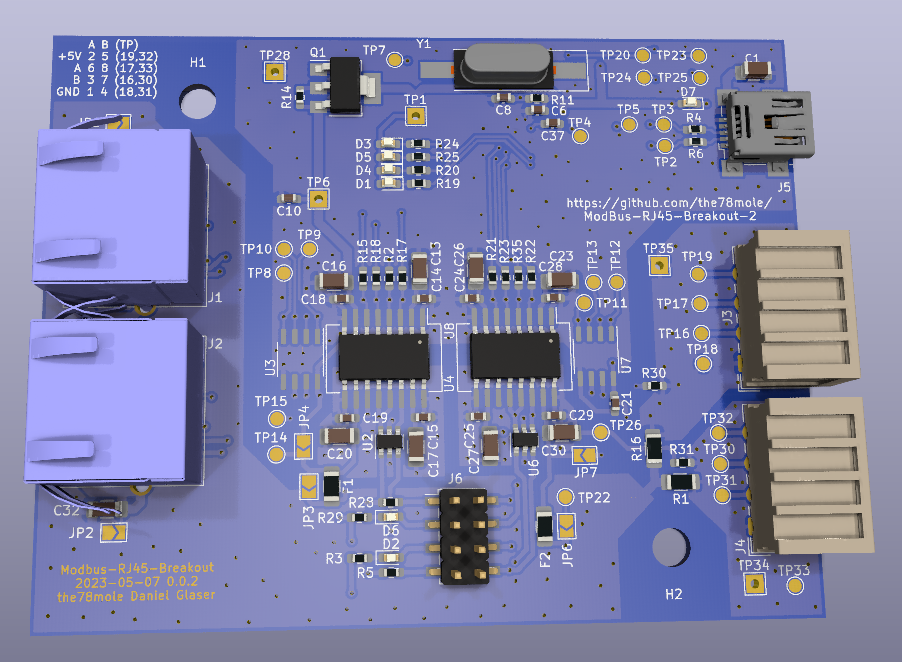

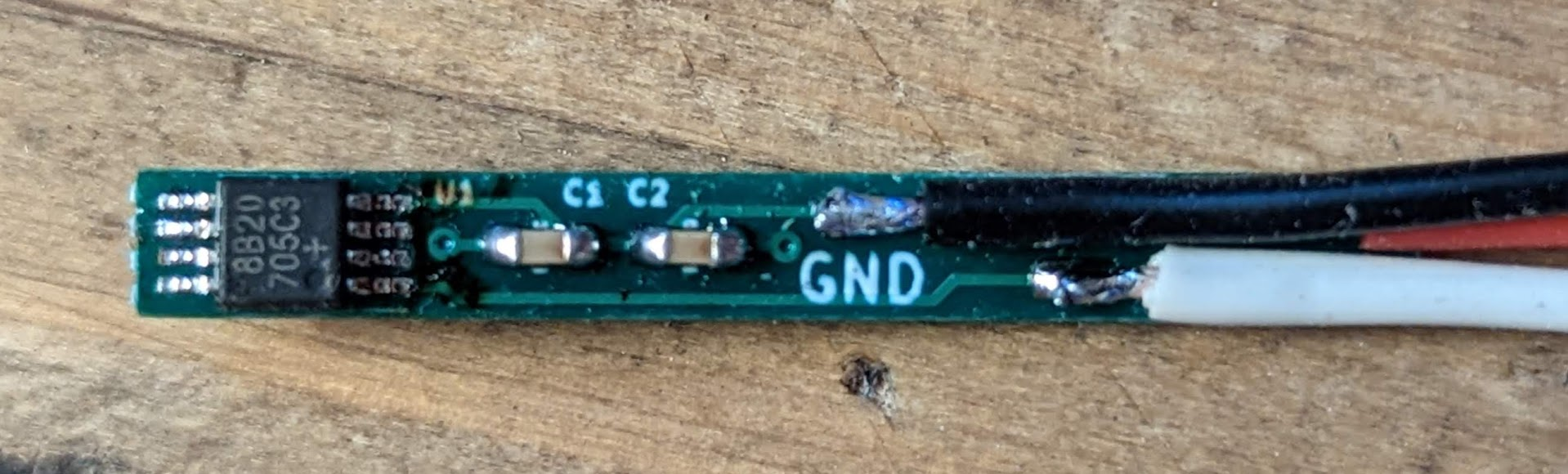

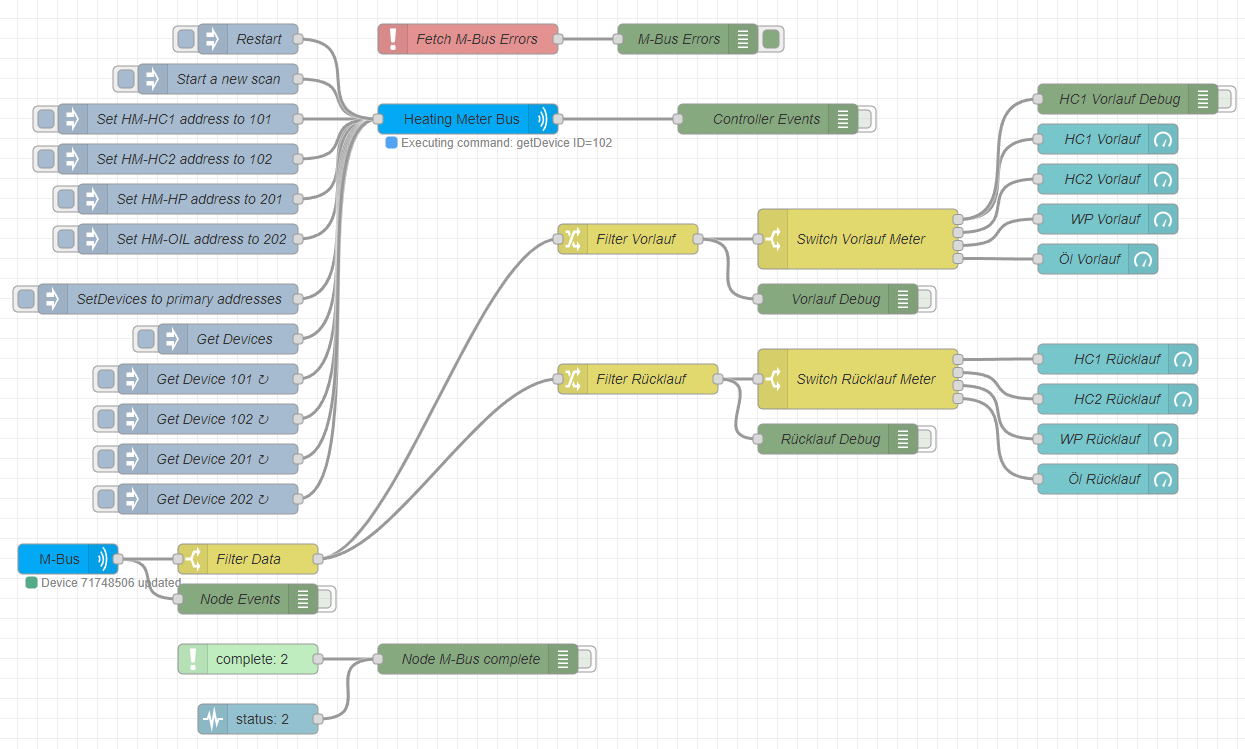

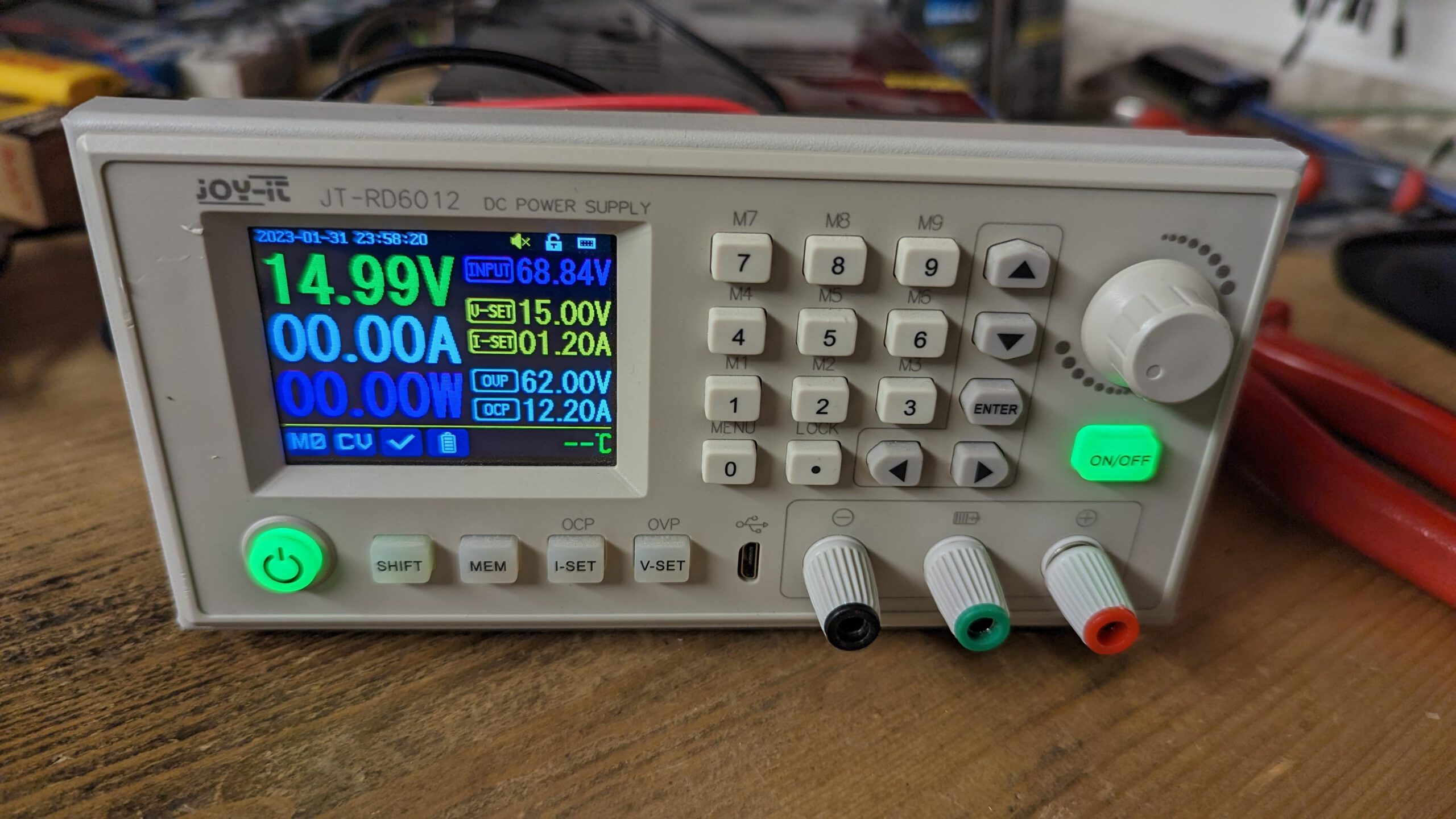

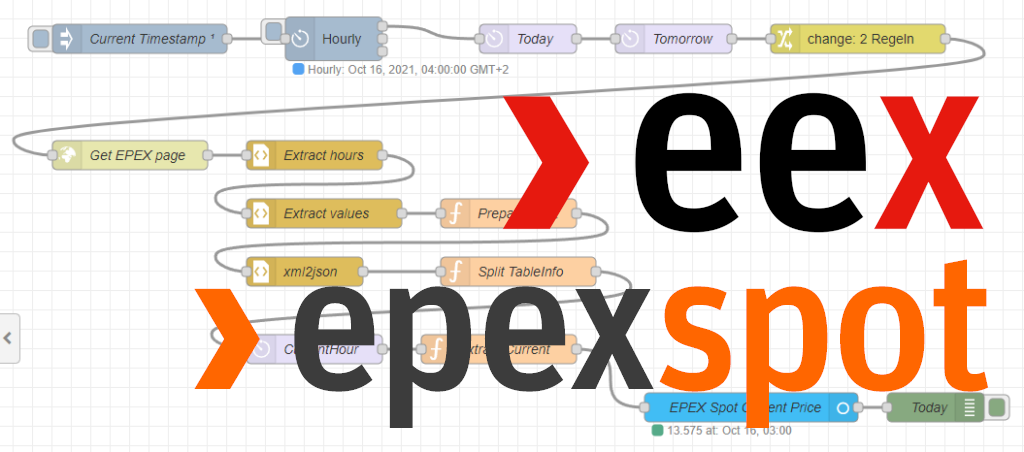

Again, I quickly built a gadget to make my life as a data-collecting mole easier. Most ModBus adapters have three drawbacks: firstly, they have open wires; secondly, if you route…

Intensive Underground Metering – An RJ45 Breakout for Connecting Your Meters Through Ethernet Cabling